function darken(color, k) {
  // Darken a CSS color by k steps (k = 0 leaves it unchanged); returns an rgb() string.
  return d3.color(color).darker(k).toString();
}

function update(root) {
  // One shared transition so all three layers animate together.
  const t = d3.transition();

  // Redraw the Voronoi cells around the current centroids.
  root.selectAll('.clusters path').data(voronoi.polygons(centroids))
    .transition(t)
    .attr('d', d => d == null ? null : 'M' + d.join('L') + 'Z');

  // Recolor each data point by its cluster and move it into place.
  root.selectAll('.dots circle')
    .transition(t)
    .attr('fill', d => color_scheme_1(d.cluster))
    .attr('cx', d => xScale(d.x))
    .attr('cy', d => yScale(d.y));

  // Move the centroid markers.
  root.selectAll('.centers circle')
    .transition(t)
    .attr('cx', d => xScale(d.x))
    .attr('cy', d => yScale(d.y));
}

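centroids = {
  restart; // referencing `restart` re-runs this cell whenever it changes
  // Initial guesses: k data points drawn at random. x and y are taken from
  // the same point, so every centroid starts on an actual data point.
  return d3.range(k).map(() => {
    const p = data[getRandomInt(data.length)];
    return {x: p.x, y: p.y};
  });
}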
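
The `centroids` cell only produces the starting guesses. In Lloyd's k-means, the update step then moves each centroid to the mean of the points assigned to it. The cells shown here do not include that step. What follows is a minimal standalone sketch. It assumes each point already carries a `cluster` index, which is the field the `.dots` fill callbacks read. `updateCentroids` is a hypothetical name. Keeping the old centroid when a cluster is empty is one common choice, not necessarily what this notebook does.

```javascript
// Hypothetical update step of Lloyd's k-means: move each centroid to the
// mean of the points currently assigned to it (via their `cluster` index).
function updateCentroids(points, centroids) {
  // Running sums and counts, one entry per centroid.
  const sums = centroids.map(() => ({ x: 0, y: 0, n: 0 }));
  for (const p of points) {
    const s = sums[p.cluster];
    s.x += p.x;
    s.y += p.y;
    s.n += 1;
  }
  // An empty cluster keeps (a copy of) its previous centroid instead of NaN.
  return sums.map((s, i) =>
    s.n === 0 ? { ...centroids[i] } : { x: s.x / s.n, y: s.y / s.n }
  );
}

const labelled = [
  { x: 0, y: 0, cluster: 0 },
  { x: 2, y: 0, cluster: 0 },
  { x: 10, y: 10, cluster: 1 },
];
const old = [{ x: 5, y: 5 }, { x: 9, y: 9 }, { x: 3, y: 3 }];
console.log(updateCentroids(labelled, old));
// → [ { x: 1, y: 0 }, { x: 10, y: 10 }, { x: 3, y: 3 } ]
```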

voronoi = d3.voronoi()
  .x(d => xScale(d.x))
  .y(d => yScale(d.y))
  .extent([[0, 0], [width, height]])
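
`voronoi.polygons(centroids)` returns one polygon per centroid. Each polygon is an array of `[x, y]` vertices, and the entry can be missing for a site that coincides with an earlier one. The cells turn each polygon into SVG path data with `'M' + d.join('L') + 'Z'`. The standalone sketch below isolates that conversion under a hypothetical name, `polygonToPath`. It works because each inner `[x, y]` array stringifies as `"x,y"`.

```javascript
// Convert a polygon (array of [x, y] vertices) into SVG path data, as the
// notebook's .attr('d', ...) callbacks do. Joining the vertex arrays with
// "L" gives "x0,y0Lx1,y1L...", since each [x, y] stringifies as "x,y".
function polygonToPath(polygon) {
  // `== null` catches both null and undefined (a missing cell).
  return polygon == null ? null : 'M' + polygon.join('L') + 'Z';
}

console.log(polygonToPath([[0, 0], [10, 0], [10, 10]])); // → M0,0L10,0L10,10Z
console.log(polygonToPath(undefined)); // → null
```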

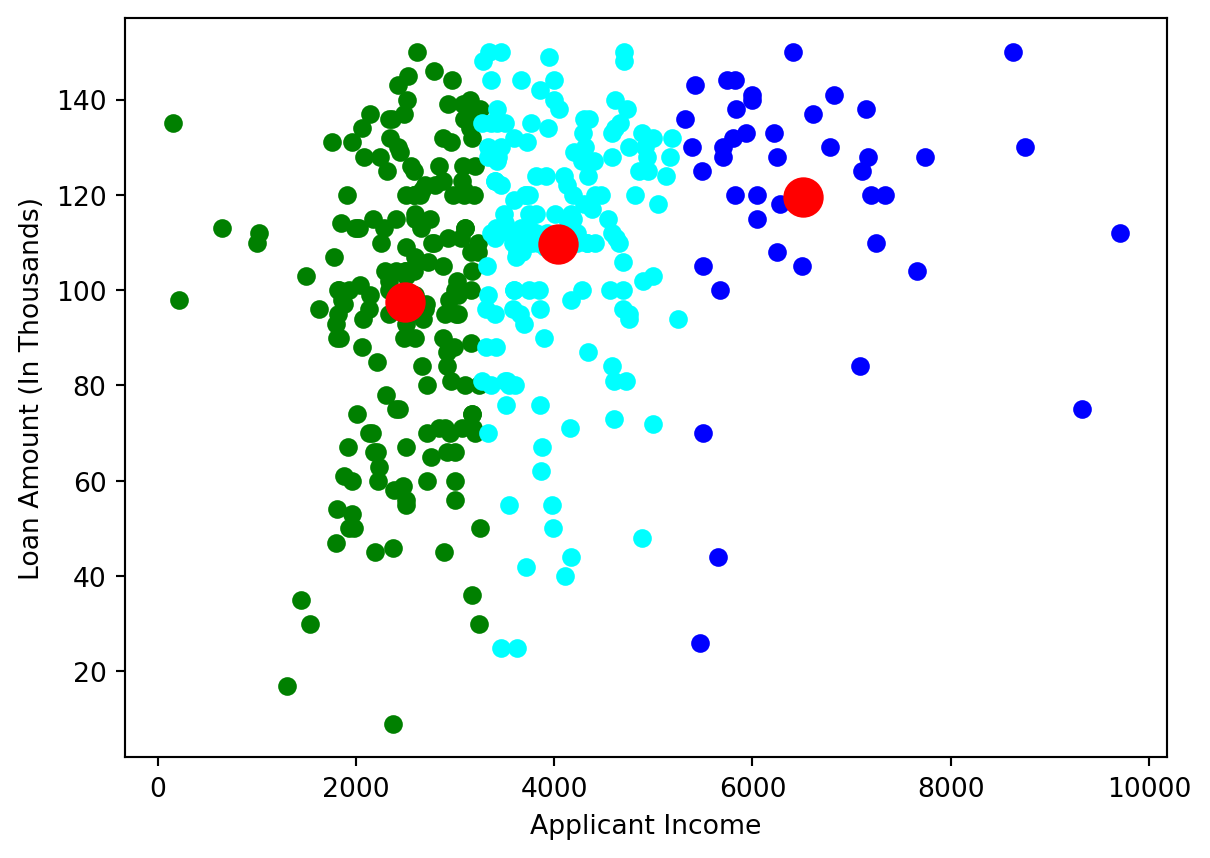

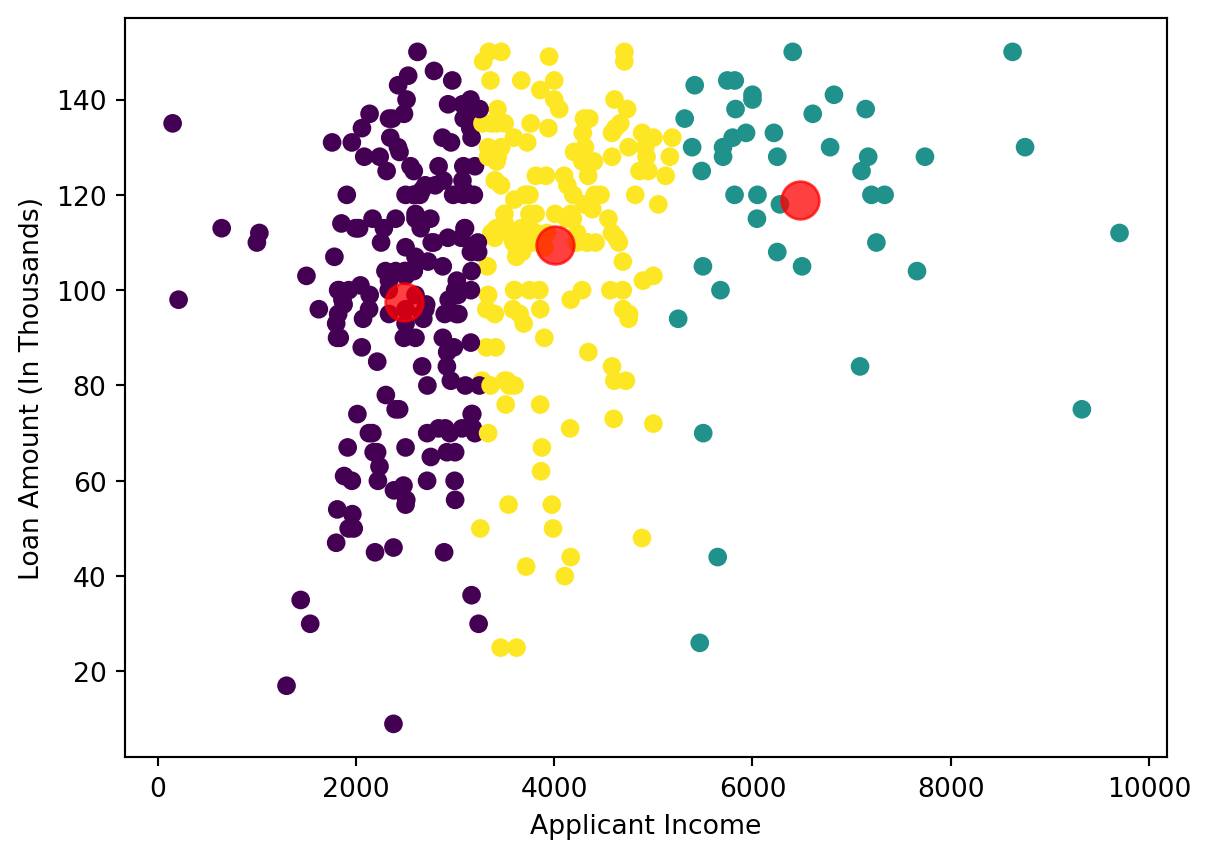

color_labels = ["red", "green", "blue", "yellow", "brown", "orange"]

color_scheme_1 = d3.scaleOrdinal()
  .domain(d3.range(k))
  .range(color_labels.map(d => darken(d, 0)))

color_scheme_2 = d3.scaleOrdinal()
  .domain(d3.range(k))
  .range(color_labels.map(d => darken(d, 1)))

function distance(a, b) {
  return Math.sqrt((a.x - b.x) ** 2 + (a.y - b.y) ** 2);
}
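
`distance` is what the k-means assignment step needs: each point is labelled with the index of its nearest centroid. The step that sets `cluster` (the field the `.dots` fill callbacks read) is not among the cells shown here. This is a minimal standalone sketch, assuming points and centroids are plain `{x, y}` objects. `assignClusters` and `euclideanDistance` are hypothetical names. The second is renamed so it does not clash with the notebook's `distance` cell.

```javascript
// Same formula as the notebook's `distance` cell.
function euclideanDistance(a, b) {
  return Math.sqrt((a.x - b.x) ** 2 + (a.y - b.y) ** 2);
}

// Hypothetical assignment step: label every point with the index of its
// nearest centroid. Returns new objects; the input points are not mutated.
function assignClusters(points, centroids) {
  return points.map(p => {
    let best = 0;
    let bestDist = Infinity;
    centroids.forEach((c, i) => {
      const dist = euclideanDistance(p, c);
      if (dist < bestDist) {
        bestDist = dist;
        best = i;
      }
    });
    return { ...p, cluster: best };
  });
}

const pts = [{ x: 0, y: 0 }, { x: 1, y: 0 }, { x: 9, y: 9 }, { x: 10, y: 10 }];
const cs = [{ x: 0, y: 0 }, { x: 10, y: 10 }];
console.log(assignClusters(pts, cs).map(p => p.cluster)); // → [0, 0, 1, 1]
```

Comparing squared distances would give the same labels without `Math.sqrt`. The square root is kept here only to mirror the notebook's helper.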

function getRandomInt(max_value) {
  // Uniform random integer in [0, max_value).
  return Math.floor(Math.random() * max_value);
}
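
Drawing each starting centroid with an independent `getRandomInt` call can pick the same data point twice. That produces two coincident centroids and a missing Voronoi cell. One way to avoid this is to sample k distinct points with a partial Fisher-Yates shuffle. This is offered as an assumption, not as the notebook's approach. `sampleDistinct` is a hypothetical helper.

```javascript
// Hypothetical helper: pick k distinct items from `items` with a partial
// Fisher-Yates shuffle over a copy of the index array (input not mutated).
function sampleDistinct(items, k) {
  if (k > items.length) throw new RangeError('k exceeds number of items');
  const idx = items.map((_, i) => i);
  for (let i = 0; i < k; i++) {
    // Swap a uniformly chosen index from the unshuffled tail into slot i.
    const j = i + Math.floor(Math.random() * (idx.length - i));
    [idx[i], idx[j]] = [idx[j], idx[i]];
  }
  return idx.slice(0, k).map(i => items[i]);
}
```

In the notebook, something like `sampleDistinct(data, k).map(p => ({x: p.x, y: p.y}))` could serve as the body of the `centroids` cell. This assumes `data` has at least `k` points.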

svg = {
  const root = d3.select(DOM.svg(width, height))
    .style("max-width", "100%")
    .style("height", "auto");

  // Clusters
  root.append('g').attr('class', 'clusters').selectAll('path')
    .data(voronoi.polygons(centroids))
    .enter()
    .append('path')
    .attr('d', d => d == null ? null : 'M' + d.join('L') + 'Z')
    //.attr('fill', 'none')
    .attr('fill', (d, i) => color_scheme_1(i))
    .attr('fill-opacity', 0.3)
    .attr('stroke-width', 0.5)
    .attr('stroke', '#000');

  // Dots
  root.append('g').attr('class', 'dots').selectAll('circle').data(data)
    .enter()
    .append('circle')
    .attr('stroke', '#000')
    .attr('stroke-width', 0)
    .attr('fill-opacity', 1.0)
    .attr('r', 3)
    .attr('fill', d => color_scheme_2(d.cluster))
    .attr('cx', d => xScale(d.x))
    .attr('cy', d => yScale(d.y));

  // Centers
  root.append('g').attr('class', 'centers').selectAll('circle').data(centroids)
    .enter()
    .append('circle')
    .attr('r', 5)
    .attr('fill', '#000')
    .attr('fill-opacity', 0.7)
    .attr('cx', d => xScale(d.x))
    .attr('cy', d => yScale(d.y));

  // Update
  update(root);

  return root;
}

svg.node()